The development of the new regulation on the use of artificial intelligence in the Brazilian judiciary.

*Co-authored with Tainá Aguiar Junquilho and Ricardo Villas Bôas Cueva. Originally published in JOTA.

**This is an AI-powered machine translation of the original text in Portuguese.

Resolution 615 of the National Council of Justice (CNJ) has just been published, regulating the use of artificial intelligence in Brazilian courts. To revise the previous regulation on the subject, a Working Group (WG) composed of judges, academics, and AI and technology experts was established to address the new challenges brought by generative artificial intelligence (GAI).

In addition to a series of internal meetings and debates, the WG conducted academic studies and empirical research, held public hearings with national and international guests to gather feedback from civil society and experts on preliminary versions of the text, and received several written institutional contributions. This process culminated in a final deliberation by the entire commission at the end of 2024 (shortly after the approval of Bill 2338/23 in the Federal Senate) and in the text's approval by the CNJ Plenary in February 2025.

Some of the critical issues that prompted the revision of the resolution involved the degree of risk of AI systems developed by the courts and how they should be classified; the level and frequency of GAI usage by judges and court staff; the activities in judicial service provision in which these tools are used, and whether some of these uses should be restricted; and the appropriate level of transparency regarding the use of these tools, both internally and toward litigants and the parties involved in judicial proceedings.

To address these issues, various studies were conducted, resulting in two reports available for consultation: "The Use of Generative Artificial Intelligence in the Judiciary" and "Mapping AI Risks in the Brazilian Judiciary".

The first report surveys regulatory efforts regarding GAIs in foreign courts and presents research findings on the use of this technology in Brazilian courts.

The concern behind the study lies in the fact that GAI’s popularity has decentralized decisions about its use in organizations, and its deployment has ceased to be transparent. Due to the associated risks, it became necessary to track current uses and establish governance policies specific to this technology.

The main ethical concerns regarding its use by judges and staff are related to the risk of automation bias (excessive trust in AI outputs) and lack of transparency. This calls for governance measures to ensure transparency and content review, as well as training to promote proper and responsible use of available tools.

Governance measures for courts were addressed in a recent UNESCO report, which recommends in particular that GAI use be disclosed to third parties as a form of responsible usage. However, the foreign courts that have adopted guidelines or regulations so far do not require disclosing GAI use in decisions to third parties; they require only peer-to-peer transparency for internal content review within the court.

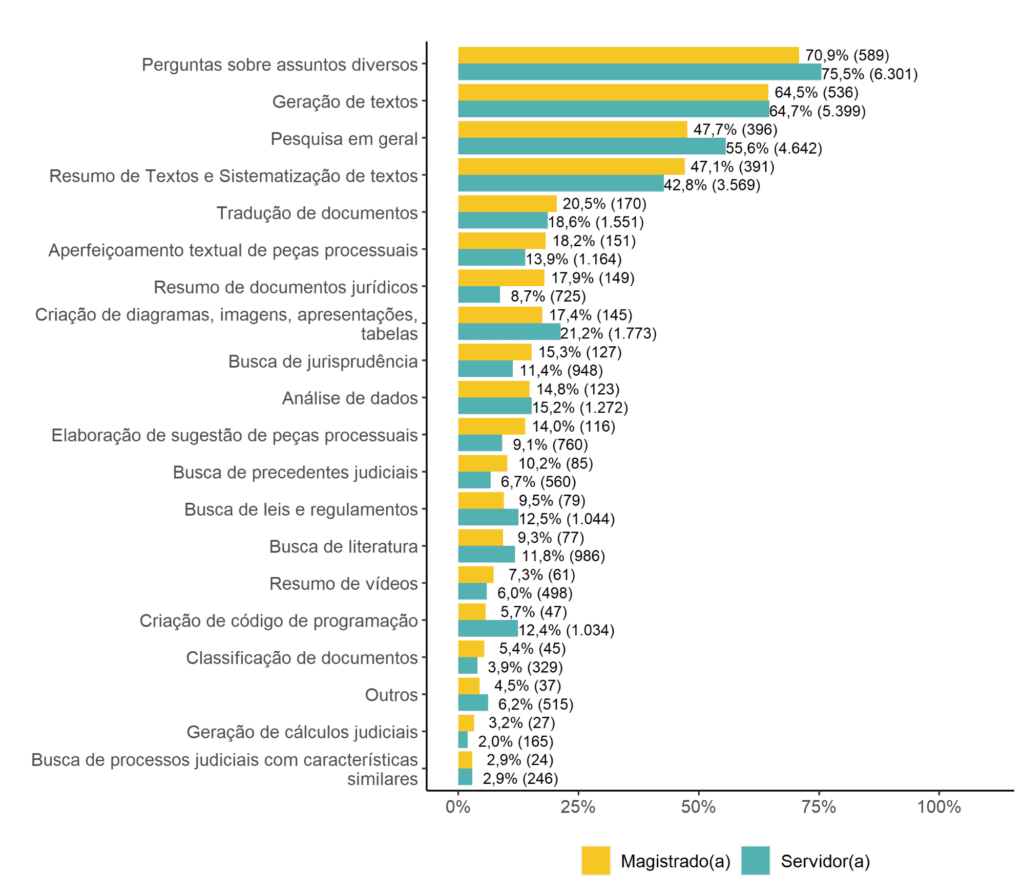

The research, conducted through opt-in interviews, reached a significant share of the judiciary (around 10% of judges) with good demographic distribution. It revealed substantial use of GAIs in Brazilian courts: 50% of judges and court staff have used the tool, and around 30% of them use it for judicial services.

Nearly all judges and staff use GAIs privately. No fewer than 98% of respondents believe the tool is useful for professional purposes, and 93% are interested in training courses, indicating a trend of increasing usage.

Most declared uses of GAIs are appropriate—for example, for generating drafts, summarizing documents, and translating. However, there is considerable use of GAIs as general research tools and even for legal precedent searches. The use of GAI for precedent search is not inherently problematic, as long as users are guided to verify the results.

Source: GAI Research Report in the Judiciary (CNJ, 2024)

Judges identified the main difficulties and challenges in using GAIs as lack of familiarity, inaccuracies in generated content, and concerns about the legality and ethics of their use. This last concern can lead to a lack of transparency: tellingly, about 83% of court staff do not inform judges about their use of GAIs in their work.

The findings highlighted the need to recognize the lawful use of GAIs, along with the implementation of governance measures by courts and training for judges and staff, with an emphasis on transparent use within the organization—ideally through official procurement and provision of GAI tools. However, this requires further development, given the high cost of licenses and the dynamic nature of this market, with new tools being launched constantly.

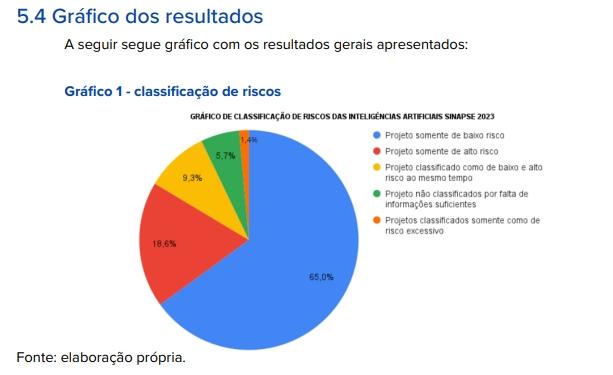

The second report, produced by the AI Governance and Regulation Lab at IDP, mapped 140 artificial intelligence projects developed or used by the Brazilian Judiciary. It used the initial proposal to update CNJ Resolution 332/2020 as a reference for determining the risk category of each system or project registered on the Sinapses platform.

The analysis showed that most systems (91) were classified as low risk, as they perform administrative support tasks, audio transcription, or general information retrieval, without directly influencing substantive issues or decision-making processes.

Twenty-six projects were identified as high risk, mainly because they exert more direct influence on judicial decisions (e.g., evaluating evidence or suggesting legal norms), thus requiring more robust governance measures such as impact assessments and data source documentation. Two projects were considered excessive risk, as they evaluate individuals’ behavior and characteristics to predict criminal activity.

The study concluded that, while some initiatives are potentially sensitive, the majority of ongoing projects are classified as low risk and therefore do not require complex governance measures.

The research also pointed to the importance of more detailed descriptions of each system’s functionalities on the Sinapses platform to increase transparency and enable more precise risk assessments. Another relevant finding was the still limited presence of generative AI solutions in official registries, despite judges already experimenting with these tools individually, as shown in the first report.

The newly approved resolution includes, as key elements: criteria for risk classification of AI systems, with appropriate governance measures to address the inherent risks of these technologies; permission for GAI use provided results are reviewed; and a recommendation to prioritize systems officially provided to judges and staff, enabling greater governance, information security, and transparency in GAI use. It also establishes special care and audits for data protection, prioritizing anonymization (especially in proceedings protected by constitutional confidentiality), and creates a National AI Committee responsible for overseeing, reviewing, and implementing the resolution.

As the studies themselves revealed, this was an unprecedented effort, even globally, considering that the few foreign courts addressing this issue have so far only published general GAI usage guidelines.

In fact, the regulatory process was widely democratic, drawing on empirical evidence about existing tools, international benchmarking, and internal and public debates, and it produced a robust and innovative outcome. The resolution aims to provide the Judiciary with a governance model that mitigates risks and enables the responsible use of artificial intelligence aligned with best practices, always under human supervision and focused on preserving human values and enhancing the work environment for judges and staff.