Artificial Intelligence and the Teaching of Law

*This is an AI-powered machine translation of the original text in Portuguese.*

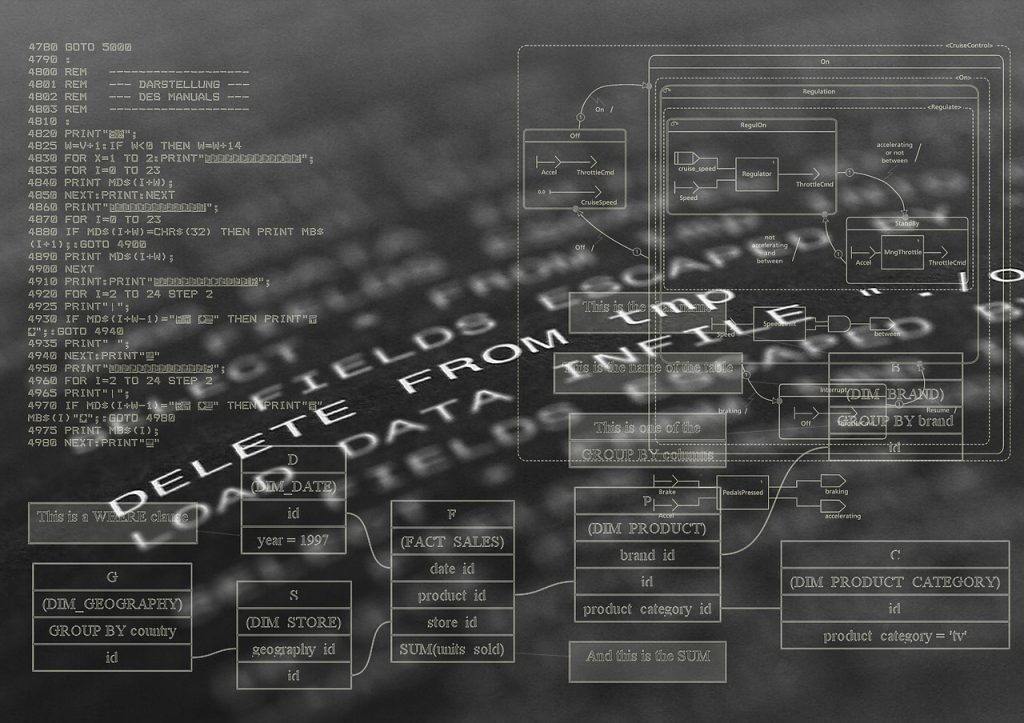

Jurists tend to perceive the relationship between computing and law as something extremely recent. These ties, however, are much older and are intertwined with the very foundation of modern law. Hobbes, in Leviathan, started from the fundamental premise that the entire scientific foundation of politics and law could be reduced to computation, which applied not only to numbers but to any area of knowledge, since logic was nothing more than adding words to create statements and statements to form syllogisms. Leibniz, a jurist by training, believed that law was nothing more than a combination of concepts, and that identifying the basic concepts to be combined would be the key to constructing all of natural law and codifying the Corpus Juris Civilis, so that the solution to any conflict could be reduced to a calculation over the possible combinations of the presence and absence of those concepts in the description of the case. He even proposed that each basic concept be represented by a prime number, in a scheme that could be formulated in an arithmetic based on only two digits (0 and 1). After his death, his manuscripts for the Codex, as he called it, were gathered at the University of Halle, where Carl Gottlieb Svarez, one of the main figures in drafting the Allgemeines Landrecht für die Preußischen Staaten, the first codification of German law, would later study.
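Leibniz's arithmetic idea can be illustrated with a minimal sketch. The concept names and prime assignments below are hypothetical and chosen only for illustration; they are not taken from Leibniz's manuscripts. A combination of concepts is encoded as the product of their primes, so checking whether a concept is present in a case reduces to a divisibility test.

```python
from math import prod

# Hypothetical basic concepts, each assigned a distinct prime (illustrative only).
PRIMES = {"agreement": 2, "capacity": 3, "lawful_object": 5, "fraud": 7}


def encode(concepts):
    """Encode a set of basic concepts as the product of their primes."""
    return prod(PRIMES[c] for c in set(concepts))


def contains(code, concept):
    """A concept is present if and only if its prime divides the code."""
    return code % PRIMES[concept] == 0


def decode(code):
    """Recover the set of basic concepts present in an encoded case."""
    return {c for c, p in PRIMES.items() if code % p == 0}


# A hypothetical case description in which two concepts are present.
case = encode(["agreement", "lawful_object"])
print(case, bin(case))           # the same number in decimal and in binary
print(contains(case, "fraud"))   # divisibility test for one concept
print(decode(case))
```

Because prime factorization is unique, every combination of concepts maps to exactly one number, and that number can be written with only the digits 0 and 1.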

These traces of "computation" in the very structure of law often go unnoticed by today's jurists, who tend to associate the term with what is processed by a machine made of metal, plastic, and electrical circuits. It is not surprising that they view the possibility of their intellectual work being carried out by such a machine with great skepticism. In fact, when judges use the metaphor of a robot, it usually carries a negative connotation: it serves to discredit a decision as formalistic or a witness as manipulated, or to mitigate the responsibility of the accused. In other words, it is used to indicate a lack of autonomous deliberation.

Artificial intelligence (AI) presents a challenge to this culture, similar to the Darwinian shock. Is there really a discontinuity between humans and machines, or is there continuity and gradation, as shown by the theory of evolution? After all, the concept of a machine, from a mathematical point of view, has nothing to do with the physical support of these operations but consists of a set of abstract functions connecting received information (inputs) with changes in state and the execution of actions (outputs).
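The abstract notion of a machine can be made concrete with a small sketch: a table that maps each pair (current state, input) to a pair (next state, output action). The contract lifecycle, state names, and action names below are hypothetical and serve only to show that the definition says nothing about the physical support that runs it.

```python
from typing import Dict, List, Tuple

# (current state, input) -> (next state, output action); all names are hypothetical.
TRANSITIONS: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("no_contract", "offer"): ("offer_pending", "notify_offeree"),
    ("offer_pending", "accept"): ("contract_formed", "record_contract"),
    ("offer_pending", "reject"): ("no_contract", "close_file"),
    ("contract_formed", "perform"): ("discharged", "archive"),
}


def run(state: str, inputs: List[str]) -> Tuple[str, List[str]]:
    """Feed a sequence of inputs to the machine and collect its outputs."""
    outputs = []
    for symbol in inputs:
        # Pairs missing from the table leave the state unchanged and emit "ignored".
        state, action = TRANSITIONS.get((state, symbol), (state, "ignored"))
        outputs.append(action)
    return state, outputs


final_state, actions = run("no_contract", ["offer", "accept", "perform"])
print(final_state, actions)
```

The same table could be executed by a clerk with pen and paper or by a processor; in this mathematical sense both are the same machine.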

As a result, jurists are led, on the one hand, to consider which of their tasks could be performed by electronic agents, that is, what artificial intelligence can do for law (giving rise to a new research area called Artificial Intelligence for Law, or AI&Law). On the other hand, they are prompted to consider the legal implications arising from the entry of these new agents into economic and social relations (creating another new research area that can be called Law of Artificial Intelligence, or AI Law). What makes these new areas interesting is their intrinsic interdisciplinarity. AI&Law should not be reserved for engineers or computer scientists, and AI Law is not just a matter for jurists.

In Germany, there is already talk of the need to train a new category of professionals, "legal engineers" (legal-tech-blog.de, November 11). This view points to specialization, either for engineers or for already trained jurists. It is directed at meeting the growing demand for this expertise, which is already beginning to appear in Brazil.

However, in the not-so-distant future, legal services in a law firm or in judicial proceedings will be increasingly intertwined with artificial intelligence. Lawyers and judges will shape their services by participating in the customized design of computer tools suited to their work. In this scenario, it will not be enough to give technical specialization to one group of professionals; all jurists will need to understand, as part of their basic education, the various logical (formal, mathematical) structures of the inferences they make when interpreting legal norms or engaging in adversarial argumentation. Computer scientists and engineers, for their part, also need to learn the specific logic of legal language during their education. After all, intelligent electronic agents must incorporate an understanding of the legal system into their design. Instead of learning concepts of civil law, criminal law, and so on, as is typically the case in the law courses offered to other university units, engineers and computer scientists will benefit more from understanding the logical structure of legal systems and the different types of legal norms, the hierarchical relationship between norms, the potential for conflicts and gaps, how to extract rules from precedents, the structure of the adjudication system, the main types of legal documents, how their arguments are structured, and, finally, how adversarial argumentation works in legal proceedings. In other words, jurists, engineers, and computer scientists must learn a common language, the language of legal logics, so that they can exchange and complement their knowledge.

When I speak of the logics underlying the interpretative inferences of jurists or the adversarial dynamics of argumentation, it is important to note the plural. Jurists often limit logic to the idea of subsumption (of fact under norm), with an understanding that does not go beyond Aristotelian syllogistics or rudimentary notions of classical logic, or they reduce argumentation to the study of fallacies. They remain stuck in the state of logic that existed two thousand years ago. Any mention of formal logic is met with resistance and is often mistaken, out of ignorance, for "legal formalism." In other words, jurists simply ignore the revolution that occurred in logic in the 20th century, with the emergence of non-classical logics and the development of the theory of computation.

This creates a serious limitation for jurists in dealing with the reality of AI. Artificial intelligence is, to a large extent, logic applied to the representation of knowledge and of the inferences made by intelligent agents. Or rather, applied logics. When applied to law, they must be able to represent the different types of inference involved in interpretation and argumentation: deductive inferences (in subsumption, or in the derivation of norms whose content is implied by other valid norms), inductive inferences (when jurists generalize common properties of rules to identify legal principles), abductive inferences (when jurists formulate hypotheses about the legislator's intention or deal with presumptions), and dialogical inferences (when jurists identify which arguments are justified within a set of arguments that attack and support one another). There are different logics to represent the different dimensions of these inference processes.
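As an illustration of the dialogical case, the sketch below uses one well-known formalism, abstract argumentation with grounded semantics in the style of Dung. This is a choice made for this example, not necessarily the formalism the text has in mind, and it models only the attack relation, leaving support aside. The three arguments are hypothetical. An argument is accepted when every one of its attackers is itself attacked by an already accepted argument.

```python
def grounded_extension(arguments, attacks):
    """Iterate the characteristic function from the empty set to its least fixed point."""
    attackers = {a: {x for (x, y) in attacks if y == a} for a in arguments}
    accepted = set()
    while True:
        # Arguments attacked by at least one currently accepted argument.
        defeated = {y for (x, y) in attacks if x in accepted}
        # An argument is acceptable when all of its attackers are defeated.
        new = {a for a in arguments if attackers[a] <= defeated}
        if new == accepted:
            return accepted
        accepted = new


# Hypothetical dispute:
#   A: the defendant breached the contract and is liable
#   B: force majeure excuses the breach            (attacks A)
#   C: the contract expressly excludes that excuse (attacks B)
arguments = {"A", "B", "C"}
attacks = {("B", "A"), ("C", "B")}
justified = grounded_extension(arguments, attacks)
print(sorted(justified))
```

Here C is unattacked, so it is accepted; C defeats B, which reinstates A. Two arguments that only attack each other would both remain unaccepted under this cautious semantics.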

It is also important to understand that AI is not limited to machine learning, nor is it synonymous with the products of so-called lawtech companies, as some recent texts in the legal field superficially and even erroneously suggest. In this regard, it should be highlighted that the natural language processing and machine learning techniques employed in law rarely incorporate a representation of legal knowledge. It is essential that AI&Law incorporate this knowledge if it is to aim for more ambitious applications. This incorporation serves not only to make its tools more efficient but, above all, to allow automated legal actions and decisions to be explained and justified by the electronic agent itself, which remains a significant challenge today. Unlike other areas of AI application, the field of law has a particular requirement: legal decisions gain legitimacy only to the extent that they can explain their reasoning. How to deal legally with decisions made by electronic agents that affect individual rights, in turn, raises a series of questions for AI Law.

The challenge becomes clearer when we understand the distinction between the design perspective and the intentional perspective to explain machine behavior. When Kasparov plays a game against Deep Blue, he certainly does not plan his moves by making assumptions about which of the thousands of master games stored in its database will be statistically correlated to define the top ten subsequent moves. In other words, even though he knows the details of the program, Kasparov does not adopt the design perspective (the program's instructions) while playing the game because he has a much lower capacity for conscious data processing. He attributes intentionality to the computer, treating it as if it were a human opponent with plans, beliefs, and strategies.

Now, imagine a computer that makes simple legal decisions using a machine learning algorithm that classifies a case by its proximity to previous decisions. The correlated features that drive the decision can differ greatly from what jurists understand as the grounds for a decision. At the International Conference on Artificial Intelligence and Law held in London this year, for example, one of the papers presented a machine learning-based system that predicted with a high degree of accuracy whether two U.S. Supreme Court justices would disagree in their votes. Surprisingly, the three most relevant variables the algorithm used for this prediction were the justices' seating positions in the court, the length of the vote, and the number of citations. The prediction had nothing to do with the subject matter under discussion or with the known conservative or progressive convictions of the justices examined.
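A toy sketch makes the gap visible. The code below is a one-nearest-neighbor classifier, assumed here as the simplest instance of "classifying by proximity to previous decisions"; it is not the system presented at the conference. The feature names and all data are invented for illustration.

```python
from math import dist

# Invented past decisions: (features, outcome).
# Hypothetical features: (claim amount in thousands, citations, length in pages).
PAST_DECISIONS = [
    ((10.0, 2.0, 5.0), "granted"),
    ((12.0, 3.0, 6.0), "granted"),
    ((90.0, 20.0, 40.0), "denied"),
    ((85.0, 18.0, 35.0), "denied"),
]


def predict(case):
    """Return the outcome of the closest past decision and that decision's features."""
    features, outcome = min(PAST_DECISIONS, key=lambda item: dist(item[0], case))
    return outcome, features


outcome, nearest = predict((11.0, 2.0, 5.0))
print(outcome, nearest)
```

The only "explanation" such a system can offer is the neighbor it found and the numeric distance to it. Nothing in the computation refers to a norm, a principle, or an argument, which is exactly what a jurist would expect in the grounds of a decision.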

Beyond the training of jurists, law schools and engineering and computer science programs must be prepared to produce cutting-edge research in AI&Law and AI Law. In this spirit, a group of professors from engineering, computer science, philosophy, and law at USP (University of São Paulo) founded an independent, non-profit association called Lawgorithm. Its purpose is to coordinate academic research and university education with practical initiatives in the public and private sectors, both to develop computer tools for legal activities and to reflect on the legal, social, economic, and cultural implications of AI in general. Other initiatives are also beginning to emerge in the country, in associations of so-called lawtechs and in law schools and computer science and engineering faculties seeking mutual collaboration.

The important thing is that university research and education be open to interdisciplinarity and to the combination of these fields: the sciences, mathematics, and law. Although they were intrinsically linked at their modern foundation, as products of the same material, they have unfortunately come to be seen as incompatible subjects. It is time to rethink this approach.

Originally published in JOTA.