Data of Children and Adolescents in the Digital Environment

*This is an AI-powered machine translation of the original text in Portuguese.

** Image resource obtained from Freepik.com

Constant technological advancement and the emergence of new business models and digital services bring both benefits and challenges for children and adolescents, so this scenario requires a continuous effort to identify and mitigate potential risks. The Organisation for Economic Co-operation and Development (OECD) recently updated its typology of risks associated with the digital environment for children. The update highlights how old problems such as cyberbullying and inappropriate content have changed, and how new concerns have emerged, such as exposure to fake news and deceptive commercial practices. In parallel, the United Nations Committee on the Rights of the Child issued General Comment No. 25, which sets out how States should implement the Convention on the Rights of the Child in the digital environment.

In this context, preserving the privacy of children and adolescents and protecting their personal data have become increasingly relevant. International authorities recommend adopting mechanisms that build the protection of these users' interests in by design, ensuring transparency and easy access to information. Such measures may include simplified interfaces that allow children and adolescents to exercise their rights, stricter privacy settings for this audience, and safeguards against profiling, among others.

Accordingly, streaming services, gaming platforms, and social networks have been developing privacy solutions specific to children and adolescents, offering dedicated usage modes for these age groups. In late 2022, Meta announced new technological features and settings to protect the privacy of children and adolescents. Because parents and guardians increasingly weigh security and privacy when choosing and using digital platforms, investment in these solutions has become an important competitive advantage.

In Brazil, the debate on privacy and data protection for minors had already centered on the interpretation of Article 14 of the General Data Protection Law (LGPD). In May of this year, however, the National Data Protection Authority (ANPD) published its first Statement, clarifying that the personal data of children and adolescents may be processed under any of the legal bases provided in the LGPD, as long as the processing is carried out in their best interests.

A literal reading of the law would require consent from at least one parent or legal guardian, with only a few specific exceptions. The Statement was therefore important because it established a more coherent interpretation: it avoids imposing rules that are difficult to apply in practice to certain services and businesses, while preserving the additional protection the law intended for this category of individuals.

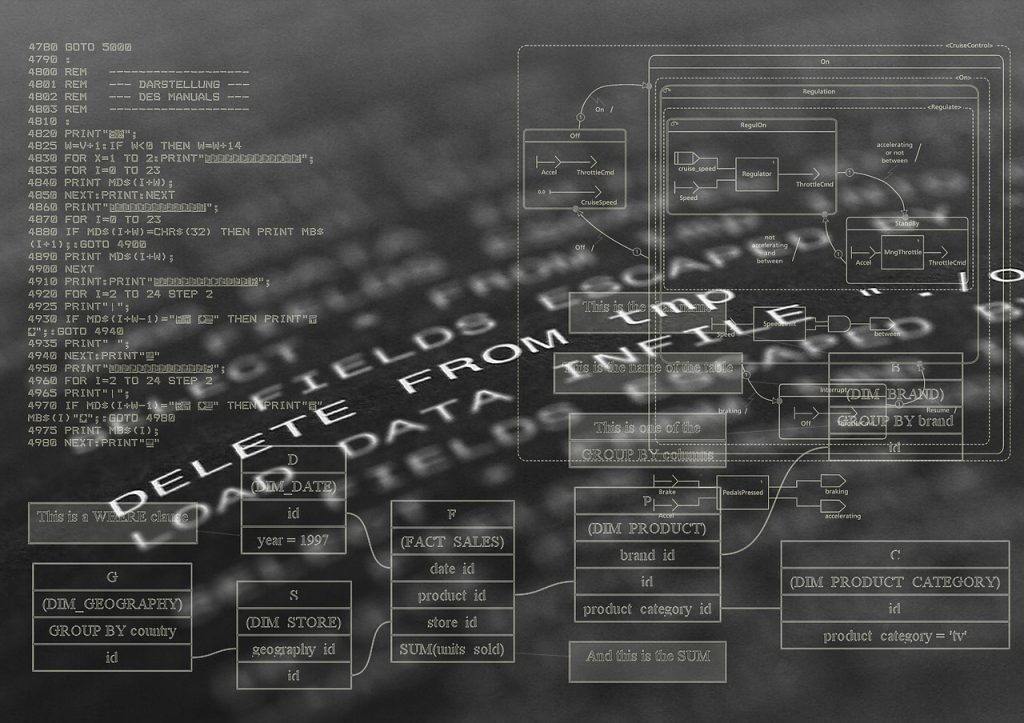

Recently, ANPD published a Technical Note evaluating how the social network TikTok processes personal data when users register on the platform. Between July and September 2022, the platform's verification system removed almost 50 million videos to protect underage users, and from January to September of that year it deleted 60 million accounts of users identified as being under 13. In the Technical Note, ANPD considered that acting in the best interests of children and adolescents does not necessarily mean processing less personal data. The authority noted that a more robust technological tool, which admittedly "would probably process more personal data," could be more appropriate, even if it meant collecting and using more of these minors' data. This interpretation may support the development of more data-intensive verification methods and suggests that data minimization is not an end in itself.

Also in Brazil, the Federal Senate is debating Bill No. 2.628/2022, which seeks to establish specific rules for information technology products and services targeted at or accessed by children and adolescents. The bill aims to ensure the autonomy, safety, and protection of children and adolescents by regulating child monitoring, digital advertising, and social media obligations, as well as the reporting of violations of these minors' rights when they use such products and services.

As new technologies, especially artificial intelligence, continue to develop, questions arise about how they may affect the autonomy of children and adolescents. These tools can offer learning and well-being experiences, but they can also pose risks such as inappropriate data collection, misinformation, and errors. The regulation of this relationship must therefore be continually reassessed through multisectoral and interdisciplinary approaches, so that the benefits of the digital environment can be delivered while the associated risks are minimized.