To measure, but not to understand: the risks of regulating AI based on isolated data

*Originally published in Correio Braziliense.

**This is an AI-powered machine translation of the original text in Portuguese.

Much has been said about the environmental impacts of artificial intelligence, but quantifying them with precision remains both a technical and a political challenge. There is no single answer to the question “what is the impact of an AI model?” precisely because there is no single model, no single training location, and no single usage pattern. Models are created, trained, and deployed in regions with different energy mixes, uneven infrastructure, and distinct business practices. A system operating in Brazil, for example, tends to have a substantially lower environmental footprint than a similar one hosted in a country that depends heavily on fossil fuels or faces water scarcity.

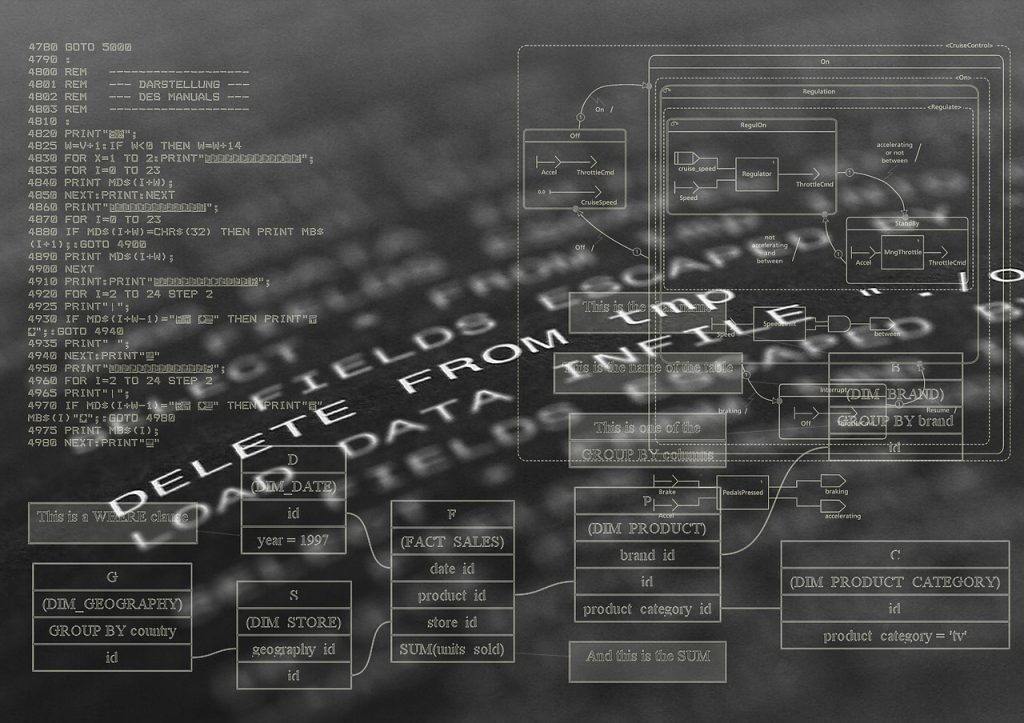

In this context, the report recently published by Mistral AI, in collaboration with the French ecological transition agency (ADEME) and specialized consultancies, marks a significant step forward. The study conducts a full life cycle assessment (LCA) of the company's models, incorporating not only the energy consumed during training but also the emissions associated with server manufacturing, as well as water use and mineral resource depletion (the latter expressed in antimony equivalent, Sb eq.).

The data are striking: training one of the company’s models resulted in 20.4 thousand tons of CO₂ equivalent, 281 thousand m³ of water consumed, and 660 kg Sb eq. Each 400-token response generated by its chatbot implies, on average, 1.14 g of CO₂e, 45 mL of water, and 0.16 mg of Sb eq.
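To make the scale of these figures easier to handle, the unit conversions can be sketched in a few lines of Python. This is only an illustrative sketch: the constants are the values quoted above, "tons" is assumed to mean metric tonnes, and the function names and the idea of expressing the training footprint as a number of responses are assumptions introduced here, not part of the report.

```python
# Figures as quoted in the text above (not independently verified).
PER_RESPONSE = {"co2e_g": 1.14, "water_ml": 45.0, "sb_eq_mg": 0.16}
TRAINING = {"co2e_t": 20_400.0, "water_m3": 281_000.0, "sb_eq_kg": 660.0}


def scale_responses(n_responses):
    """Total footprint of n 400-token responses.

    Returns kg CO2e, litres of water, and grams Sb eq.
    (each per-response unit is divided by 1,000).
    """
    return {
        "co2e_kg": PER_RESPONSE["co2e_g"] * n_responses / 1_000,
        "water_l": PER_RESPONSE["water_ml"] * n_responses / 1_000,
        "sb_eq_g": PER_RESPONSE["sb_eq_mg"] * n_responses / 1_000,
    }


def responses_matching_training_co2e():
    """Number of responses whose CO2e equals the quoted training figure.

    Assumes metric tonnes: 1 t = 1,000,000 g.
    """
    training_g = TRAINING["co2e_t"] * 1_000_000
    return training_g / PER_RESPONSE["co2e_g"]


if __name__ == "__main__":
    # One million responses is a hypothetical volume chosen for illustration.
    print(scale_responses(1_000_000))
    print(f"{responses_matching_training_co2e():.3e} responses")
```

Under these assumptions, one million responses come to roughly 1.1 tonnes of CO₂e, and it would take on the order of tens of billions of responses to equal the quoted training figure. The ratio is a way of reading the scale, not an estimate of actual usage.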

Despite its methodological merit and transparency, the study also lays bare the limits of isolated quantification. Human cognition operates with limitations in representing large magnitudes: our Approximate Number System (ANS) loses accuracy as values grow.

Added to this is scope insensitivity, a phenomenon demonstrated in empirical research: changes of orders of magnitude, such as the jump from millions to billions, often fail to affect human judgment proportionally. The difficulty of grasping large numerical scales has already been illustrated playfully through visual analogies, such as one that lets a single grain of rice stand for one hundred thousand dollars, making the scale of one billion dollars more tangible.

In this context, disclosing environmental metrics without comparative or referential anchors can lead either to underestimation or to artificial dramatization of impacts. The environmental impact of AI only acquires practical meaning when set against other activities, preferably everyday ones, that make it possible to interpret, compare, and contextualize absolute figures. Stripped of that context, the metrics lose their relational dimension and become harder to comprehend.

This does not, however, eliminate the need for caution, nor does it exempt developers and users from adopting mitigation strategies. On the developers’ side, actions include: (i) conducting comprehensive life cycle analyses, including indirect emissions and natural resource extraction; (ii) right-sizing, that is, choosing a computational architecture proportional to the task, avoiding overprovisioning, and adopting the smallest model compatible with it; and (iii) deliberately siting training phases in regions with low-carbon electricity grids and less pressure on water resources. In this sense, the National Data Center Policy plays a strategic role by positioning Brazil as a potential leader in sustainable solutions for AI infrastructure.

On the users’ side, whether individuals or organizations, mitigation involves selecting a model appropriate to the complexity of the task and avoiding general-purpose systems when leaner or purpose-built ones are sufficient. Queries and prompts can also be grouped, which cuts redundant or unnecessary exploratory interactions and thereby lowers computational consumption.

It is important to recognize, however, that the referenced study itself acknowledges significant limitations in its methodology, including the fact that many impact factors must be estimated based on theoretical models and incomplete data, which imposes an inevitable degree of uncertainty.

As AI (particularly generative AI) advances amid ecological transition efforts and in the context of intensifying global climate pressures, the debate over its sustainability demands more than technical metrics. It requires critical contextualization so that decisions on research, implementation, and technological regulation are grounded in information that is understandable, comparable, and embedded within a shared system of responsibilities.