Risks and Opportunities in the ANPD's Regulation of Automated Decisions and AI

*Co-authored with Rony Vainzof. Originally published in Conjur.

**This is an AI-powered machine translation of the original text in Portuguese.

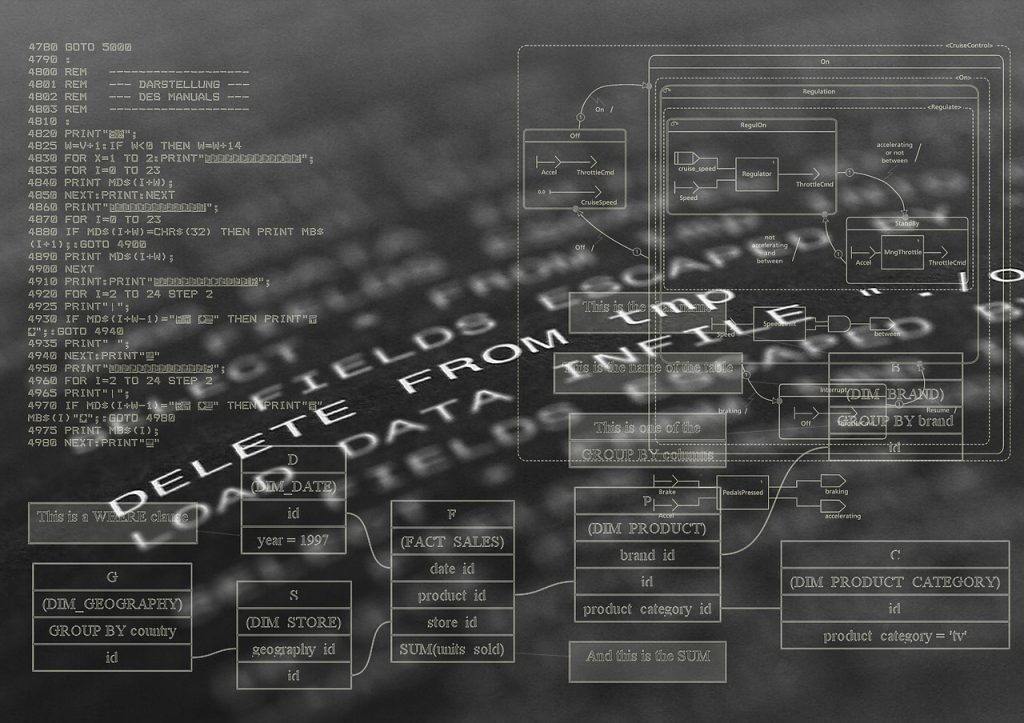

The ANPD (National Data Protection Authority) recently published a Call for Contributions on the interpretation parameters of Article 20 of the General Data Protection Law (LGPD), which addresses automated decisions, focusing on its application to artificial intelligence systems.

Article 20 of the LGPD gives data subjects the right to request a review of decisions made solely on the basis of automated processing. It also provides for a right to an explanation of the criteria used for decision-making in such systems, balanced against the business secrecy of those who employ them, and for the possibility of an ANPD audit to examine potentially discriminatory aspects of the tools used. It is the only substantive provision in the LGPD that directly provides for the possibility of an audit.

This topic is relevant to various stakeholders, especially those whose core online activities rely on automated decision-making through artificial intelligence systems, which may affect access to goods and services. Any future regulation of Article 20 will have to address several critical points, and the call for contributions is a first step in that direction.

The first concerns the meaning of "solely" automated decisions. This question has been widely discussed in European case law and regulation, which consider how much weight the automated process carries in decisions that nominally involve humans, as well as the practical risk of automation bias, in which the human involved effectively delegates the decision to the machine.

The second is the scope of the explanation required for automated systems: Does it call for a general explanation of the model and its decision-making criteria, or for the criteria applied in a particular decision? What level of detail should the explanation provide, given business secrecy concerns? Or should the explanation merely enable the affected individual to contest the decision?

A third point involves the methodology for evaluation or auditing to detect biases or discriminatory aspects in the system’s operation. Beyond these issues directly tied to Article 20, the call for contributions also raises questions about the legitimacy of using personal data to train systems, regardless of data subjects' authorization.

These issues touch on the problem of opacity: it is difficult to map the statistical correlations between a system's inputs and outputs, and harder still to attribute causal relationships to them, yet that is what would make a decision intelligible and justifiable under the legal grounds that authorize processing under the LGPD. Once again, the explainability of decision-making criteria and the justification of the system's parameters and development are central issues.
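The difference between a general explanation of a model and an explanation of a particular decision can be illustrated with a minimal sketch. It assumes a deliberately simple, fully transparent linear score; the feature names, weights, and threshold are hypothetical and are not drawn from the LGPD, the ANPD's call for contributions, or any real scoring system. Real systems are often far less interpretable, which is precisely the opacity problem described above.

```python
# Hypothetical linear score: feature names, weights, and threshold are
# invented for illustration only.
WEIGHTS = {"on_time_payments": 0.6, "debt_ratio": -0.8, "years_of_history": 0.2}
BIAS = 0.1
THRESHOLD = 0.5


def score(applicant):
    """Weighted sum of the applicant's (already normalized) features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def general_explanation():
    """Model-level view: which criteria exist and the direction each one pushes."""
    return {
        name: ("raises score" if weight > 0 else "lowers score")
        for name, weight in WEIGHTS.items()
    }


def decision_explanation(applicant):
    """Decision-level view: how much each criterion contributed for this person."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    return {
        "approved": score(applicant) >= THRESHOLD,
        "contributions": contributions,
        # The criterion that weighed most heavily against the applicant.
        "most_adverse": min(contributions, key=contributions.get),
    }


applicant = {"on_time_payments": 0.5, "debt_ratio": 0.75, "years_of_history": 0.5}
print(general_explanation())
print(decision_explanation(applicant))
```

In this sketch the general explanation discloses only which criteria exist and which way they push, without revealing the weights, while the decision-level explanation tells one individual which criterion counted most against them. Where a regulator draws the line between the two is the question the call for contributions leaves open.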

The explainability of algorithms in automated decisions had already given rise to numerous disputes and rulings in the courts, including the higher courts, even before the LGPD.

In a landmark judgment by the Superior Court of Justice (STJ) on 11/12/2014 regarding credit scoring,[1] it was decided that the "credit scoring" system is a method developed to assess credit risk, using statistical models that consider various variables and assign a score to the evaluated consumer (credit risk score).

In assessing credit risk, the limits established by the consumer protection system must be respected to safeguard privacy and ensure maximum transparency in business relations, as provided by the Consumer Defense Code (CDC) and Law No. 12,414/2011.

On one hand, the methodology for calculating the credit risk score ("credit scoring") is considered a trade secret, and its mathematical formulas and statistical models do not need to be disclosed (Article 5, IV, of Law No. 12,414/2011: "...protecting business secrecy").

On the other hand, it is not necessary to obtain the consumer's prior and express consent for the evaluation, as it is not a database but a statistical model. When requested, however, the evaluated consumer must be given clarification, with a clear and precise indication of the databases used (credit history), so that the individual can verify the accuracy of their data, rectify inaccuracies, or improve their market performance.

Currently, significant debates revolve around platforms that intermediate digital services, transportation services, and marketplaces offering delivery services. The ANPD's position on how far the right to explanation extends against the right to secrecy could affect numerous court cases, including labor disputes and the legal theories debated in that field.

The lack of clear regulatory criteria and requirements has led to court decisions dealing with, among other matters, source code disclosure,[2] algorithmic subordination,[3] and account removal.[4]

Although such measures may at first seem aimed at ensuring system transparency and non-discrimination, which are legitimate and desirable objectives, they may introduce legal uncertainty into the development and application of automated systems and AI without necessarily achieving the values the legislation pursues.

Requiring source code disclosure as an explainability measure, as certain precedents have done, does not necessarily ensure transparency about algorithmic decisions and could jeopardize the intellectual property rights that protect these assets.

Demanding causal explanations for statistical correlations, as noted by the ANPD, could lead to assumptions about subordination relationships, particularly regarding automated decisions directing rides and deliveries for drivers and couriers. Excessive measures in this area, such as for pricing algorithms, might compromise competitive practices for these platforms.

In one such decision, handed down on 06/18/2024,[5] the STJ's Third Chamber found that there is a well-founded concern regarding the influence of machine decisions on individuals' lives. Automated data analysis may lead data controllers to erroneous assumptions, raising risks, especially of discrimination, reduced individual autonomy, errors in AI modeling and statistical calculations, or biased outcomes based on outdated, unnecessary, or irrelevant data.

Thus, it was decided that data subjects must be informed of the reason for an account suspension and may request a review of the decision, ensuring their right to defense. If an individual's conduct is sufficiently serious, posing risks to the platform's operation or its users, immediate suspension is permissible, with the option of requesting reinstatement later, ensuring due process.[6]

Respecting due process and the right to defense, platforms can conclude that there was a violation of their terms of conduct and determine account removal, subject to judicial review.

Another aspect is the right to human review of automated decisions, which was not included in the wording of Article 20 of the LGPD. However, European legislation explicitly guarantees this right, limiting it to automated decisions that could restrict individuals' rights or significant economic interests.

Potential regulations under Article 20 may establish scenarios where automated decisions require human intervention. Care must be taken not to confuse human review of automated decisions with human oversight throughout the AI lifecycle, and not to impose excessive burdens on automated processes that could render certain applications unfeasible.

ANPD regulation of automated decisions and artificial intelligence is, therefore, essential for promoting legal certainty. The parameters established must be clear, preventing divergent interpretations and considering their implications and impacts on innovation and competitive dynamics across various sectors.[7]

Automated decisions are critical for diverse services and for the sustainability of the digital environment: they help prevent fraud, protect individual security, improve the accuracy of credit decisions, and remove hateful or misleading content from social networks. Even so, there must be clarity about informational due process.

In other words, there must be transparency about the criteria used for decision-making, especially in decisions that pose high risks to individuals' fundamental rights and guarantees. Ensuring clarity for citizens and society about the criteria used in automated decisions safeguards against potential arbitrariness and harmful biases affecting minority groups.

The guidelines should serve as vectors for building a regulatory environment that balances technological innovation with the protection of data subjects' rights, prevents legal conflicts, and ensures responsible technology use.

[1] STJ – REsp: 1419697 RS 2013/0386285-0, Relator: Ministro PAULO DE TARSO SANSEVERINO, Date of Judgment: 11/12/2014, S2 – SEGUNDA SEÇÃO, Date of Publication: DJe 11/17/2014 RSSTJ vol. 45 p. 323 RSTJ vol. 236 p. 368 RSTJ vol. 240 p. 256.

[2] REsp No. 2,135,783/DF, relatora Ministra Nancy Andrighi, Terceira Turma, judgment on 06/18/2024, DJe on 06/21/2024; TJSP; Agravo de Instrumento No. 2073113-21.2021.8.26.0000; Relator(a): Caio Marcelo Mendes de Oliveira; Tribunal: 32ª Câmara de Direito Privado; Foro de Embu das Artes – 2ª Vara Judicial; Date of Judgment: 11/29/2021; TJMG – Apelação Cível 1.0000.23.083409-5/001, Relator(a): Des.(a) Pedro Aleixo, 3ª CÂMARA CÍVEL, judgment on 10/25/2024, publication 10/29/2024.

[3] RRAg-924-88.2022.5.13.0022, 1ª Turma, Relator Ministro Amaury Rodrigues Pinto Junior, DEJT 11/19/2024.

[4] REsp No. 2,135,783/DF, relatora Ministra Nancy Andrighi, Terceira Turma, judgment on 06/18/2024, DJe on 06/21/2024.

[5] STJ – REsp: 2135783 DF 2023/0431974-4, Relator: Ministra NANCY ANDRIGHI, Date of Judgment: 06/18/2024, T3 – TERCEIRA TURMA, Date of Publication: DJe 06/21/2024.

[6] Examples cited include inappropriate driver behavior such as harassment or sexual misconduct, racism, property crimes, physical and verbal aggression, among other issues affecting not only the contractor but also the consumer, their well-being, security, and dignity.

[7] Particularly regarding § 1 of Art. 20: the controller must provide clear and adequate information about the criteria and procedures used for automated decisions when requested, observing commercial and industrial secrets.