The New York Times versus OpenAI

*This is an AI-powered machine translation of the original text in Portuguese.

** Originally published in JOTA.

"Independent journalism is vital for our democracy." The opening sentence of the complaint filed by The New York Times against Microsoft and OpenAI, which make ChatGPT available, is significant. It may mark the beginning of the end for modern copyright protection and perfectly symbolizes the crisis in the modern conception of law as the protection of subjective rights by the state.

The sentence appeals to the survival of an activity (journalism) in the name of an objective value (democracy) rather than to the violation of an individual right. It highlights an issue that has already plagued traditional journalism in the face of new media. Producing quality journalistic content, with reliable information and well-founded opinions, is costly but essential for a healthy public sphere capable of containing misinformation. However, revenue from subscriptions and advertising is compromised when content is aggregated and, at times, made directly available by users themselves in various internet applications.

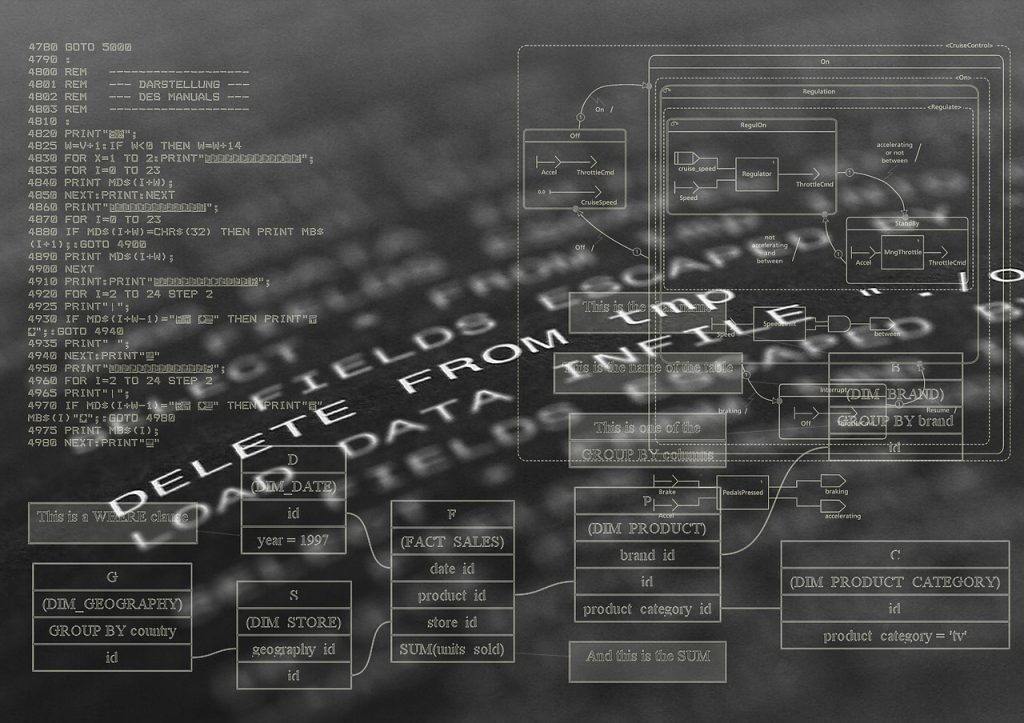

This concern is exacerbated by generative artificial intelligence, which threatens to put the content available on any open website in the same critical situation as journalism companies. Chatbots like ChatGPT, Bard, and others, although not strictly search tools, combine their extraordinary natural language conversation capabilities with the search, aggregation, and synthesis of online content. Their users (many of whom already do so uncritically) will no longer visit content-producing sites, instead consuming that content indirectly, pre-processed by the chatbots, which jeopardizes the business model of internet content applications.

But the law, at least in the adjudication system, was not designed to deal with such a broad issue, and the NYT’s lawsuit bravely tries to reduce an already controversial issue, the remuneration of journalism in the digital field, to a copyright infringement. The problem is that copyright protection is atomized: it focuses on the unauthorized reproduction of specific (well-defined and identifiable) content created by the author. How can one translate into this framework the provision of a tool capable of creating (not necessarily reproducing) new content at the user's initiative (prompts), based on an immense database of which the NYT's content represents a tiny portion?

To illustrate, content created by the NYT represents 1.2% of the sources listed in WebText2, which, with 19 billion tokens, accounts for 22% of the tool's training mix. Common Crawl, which gathers content from various internet links, mainly Wikipedia, has 410 billion tokens and represents 60% of the training mix. At most, the NYT can point out that its domain is among the top 15 sources, with approximately 100 million tokens, alongside other journalistic sites and publishers like The Washington Post, Chicago Tribune, Al Jazeera, Springer, FindLaw, etc. Except through artificially constructed prompts, it is impossible to single out specific content (whether from the NYT or other sources) in the training database and assign it a determining role in producing any creative output generated by ChatGPT.

The complaint tries out a series of tentative concepts to approximate a copyright violation. It mentions, for example, “mimicking style” instead of reproducing text. However, copyright law protects the expression of a work in a specific medium, and "style" is closer to an idea or concept, which is not protected. The metaphor of “style copying” has already been questioned in other lawsuits brought by artists, especially regarding generative image AIs.[1] It also refers to “copying for training” (rather than copying the work) as a form of unauthorized use, but in doing so, it practically admits transformative use. It further mentions “almost-verbatim citations” or “near-syntheses” of originals, which are, by definition, neither verbatim nor identical. At the same time, it includes the accusation that ChatGPT generates content falsely attributed to the NYT, which in fact undermines the proof of reproduction that the complaint seeks to establish.

It is not easy to dismiss “fair use” in the case of ChatGPT, and the complaint only attacks the “transformative use” criterion, arguing that there is no transformation or change of purpose when the created products replace The Times and “steal its audience.” Note, however, that this is not about substituting a specific work and using it for the same purpose but about a new way of consuming and processing content through conversation and specific queries. Moreover, in most of the content generated by ChatGPT, new information (sometimes incorrect) is added by inferring the words likely to follow the preceding text, based on billions of examples from the various sources that make up the training database. It is difficult to deny a new purpose and a new type of service, even if there is commercial use and market competition.[2]

The other three parameters of "fair use," which under U.S. law exempts the user from liability, also seem hard to circumvent. Protection may be denied because of the nature of the work, for example, if we are dealing with an already published and predominantly factual text (as opposed to fiction), where the use provides a public benefit and access to information. When the amount or substantiality of the portion taken from the work is not significant, fair use can also be recognized, even for commercial use. In common uses of ChatGPT, there would not even be a reproduction of an identified text excerpt from the NYT. As for the effect on the market, the plaintiff would have to show the usurpation of a specific opportunity for profit from the protected work, which is different from pointing out, in the general use of the tool, a risk to the entire economic activity of journalism.

The NYT’s complaint, therefore, focuses on examples of “memorization.” Large language models (LLMs) can be fine-tuned with additional training on a more specific text base, which can lead the chatbot, given certain prompts, to reproduce significant portions of original texts included in the training base. For example, artificial prompts that reference a specific text, indicate a particular paragraph, and request the subsequent one can produce a fairly accurate result, which is practically a retrieval of the original text.

However, this possibility, which would indeed be closer to reproduction, does not address the central problem that could undermine the funding of journalism and even the production of any content on open websites on the internet. Upon closer inspection, retrieval prompts, as exemplified in the NYT’s complaint, could only be executed by users already somewhat familiar with the original text (at the very least, it would be laborious for the user to access all the original content through such a mechanism).

Additionally, the complaint points to searches on Bing, where the result already provides summaries of the text available on the page. Summarization is also a popular use of ChatGPT that users can subsequently employ themselves (by copying the text, or by inserting the link, plug-ins, or the PDF in the prompt, and requesting a summary). However, the problem here seems more related to the absence of a hyperlink to the NYT page, a concern already raised in the debate over the remuneration of journalism in digital media.

In this way, the complaint begins with the correct target and raises an alert for general reflection on the future we wish to live in. But its translation into an alleged copyright violation leaves the real problem out, using a framework that no longer fits. What is at stake is the replacement of a cultural practice with the emergence of new media and forms of social communication mediated by machines. The key question is how to direct this new and unavoidable technology in a way that preserves essential values for societal life.

[2] In a recent ruling, the U.S. Supreme Court expanded the notion of transformative use by recognizing fair use in Google’s reimplementation of parts of the Java API for the Android platform. See Google LLC v. Oracle America, Inc., https://harvardlawreview.org/print/vol-135/google-llc-v-oracle-america-inc/